ARC Prize Survives Three Months

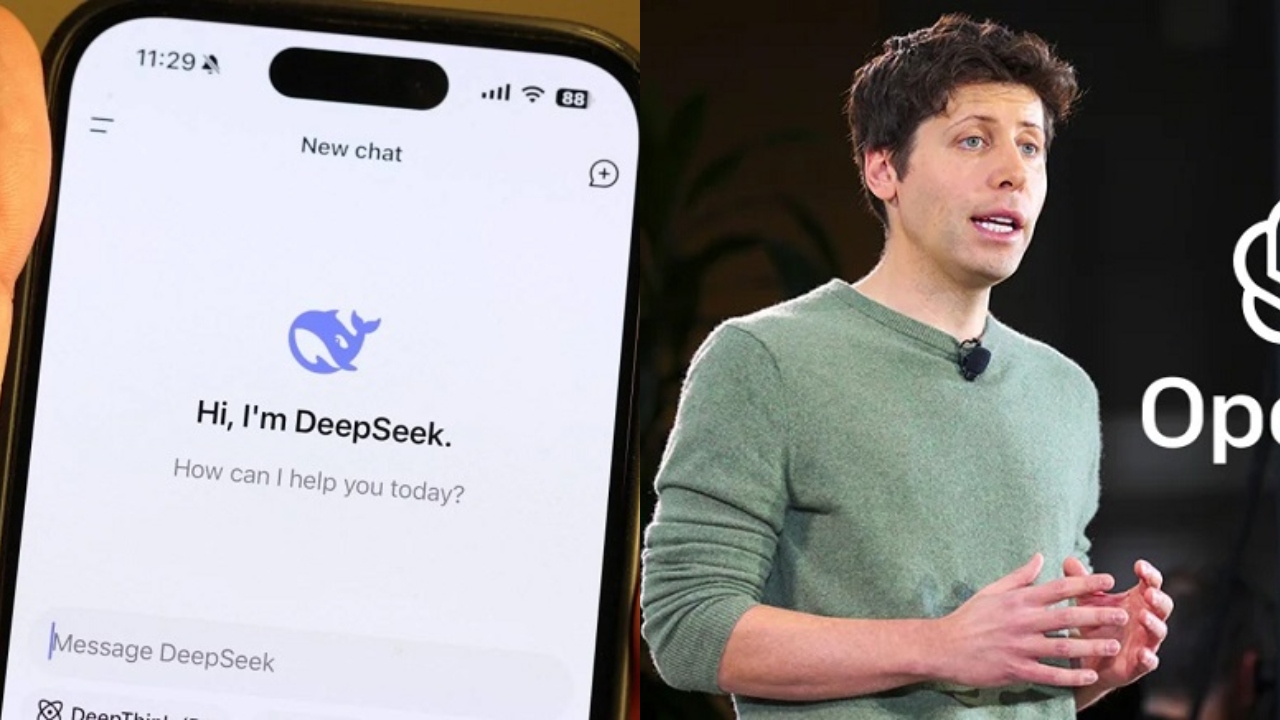

In conclusion, as companies increasingly rely on large volumes of data for decision-making, platforms like DeepSeek are proving indispensable in changing how we find information. Having these massive models is good, but very few fundamental problems can be solved with them alone. Now that DeepSeek has moved from outlier to the center of public attention, just as OpenAI did a few short years ago, its real test has begun. So I started digging into self-hosting AI models and quickly found that Ollama could help with that. I also looked at various other ways to start using the huge number of models on Hugging Face, but all roads led to Rome. Given everything I had read about models, I figured that if I could find a model with a very low parameter count I might get something worth using, but the catch is that a lower parameter count results in worse output. As the model processes new tokens, these slots update dynamically, maintaining context without inflating memory usage.
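Since Ollama is the self-hosting route mentioned above, here is a minimal sketch of talking to a local Ollama server over its REST API using only the Python standard library. It assumes Ollama is running on its default port (11434) and that the model tag you pass has already been pulled; treat it as an illustration rather than a complete client.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server replies with one JSON object whose
    # "response" field holds the full completion.
    return data["response"]
```

For example, `generate("deepseek-r1:1.5b", "Explain KV caching in one sentence.")` should return a short answer if that tag (used here only as an example of a small, low-parameter model) is installed; run `ollama list` to see which tags you actually have.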


By intelligently adjusting precision to match the requirements of each task, DeepSeek-V3 reduces GPU memory usage and speeds up training, all without compromising numerical stability or performance. Broadly, the management approach of 赛马 ("horse racing", or a bake-off in a Western context), where individuals or teams compete to execute the same task, has been common across top software companies. That is when you have to look at individual companies: go out, go to China, and meet with the factory managers and the people working on R&D. I still think they're worth having on this list because of the sheer number of models they make available with no setup on your end apart from the API. They were saying, "Oh, it must be Monte Carlo tree search, or some other favorite academic technique," but people didn't want to believe it was basically reinforcement learning: the model figuring out on its own how to think and chain its thoughts. H20s are less efficient for training and more efficient for sampling, and they are still allowed, though I think they should be banned.
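The memory side of the precision claim comes down to simple arithmetic: every value stored in a lower-precision format takes fewer bytes. The sketch below estimates weight memory only. The parameter count and the FP8/BF16 split are hypothetical knobs, not DeepSeek-V3's actual configuration, and the estimate ignores activations, optimizer state, and any higher-precision master copies a real training setup keeps.

```python
# Bytes needed to store one value in each numeric format.
BYTES_PER_VALUE = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}

GIB = 2**30


def weight_memory_gib(num_params: int, fmt: str) -> float:
    """GiB needed to hold `num_params` weights in a single format."""
    return num_params * BYTES_PER_VALUE[fmt] / GIB


def mixed_precision_gib(
    num_params: int,
    low_fraction: float,
    low_fmt: str = "fp8",
    high_fmt: str = "bf16",
) -> float:
    """GiB when `low_fraction` of the weights use `low_fmt` and the rest `high_fmt`.

    The single fraction is a hypothetical simplification: real mixed-precision
    schemes choose the format per tensor or per operation, keeping sensitive
    parts in higher precision for numerical stability.
    """
    if not 0.0 <= low_fraction <= 1.0:
        raise ValueError("low_fraction must be between 0 and 1")
    bytes_per_param = (
        low_fraction * BYTES_PER_VALUE[low_fmt]
        + (1.0 - low_fraction) * BYTES_PER_VALUE[high_fmt]
    )
    return num_params * bytes_per_param / GIB
```

For a hypothetical 7-billion-parameter model, `weight_memory_gib(7_000_000_000, "fp32")` comes to roughly 26 GiB, BF16 halves that to about 13 GiB, and `mixed_precision_gib(7_000_000_000, 0.5)` lands near 9.8 GiB.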


Scales are quantized with 6 bits. All indications are that they finally take it seriously once it has been made financially painful for them, the only way to get their attention about anything anymore. Unlike standard Transformer attention, which requires memory-intensive caches for storing raw keys and values (KV), DeepSeek-V3 employs an innovative Multi-Head Latent Attention (MHLA) mechanism that caches a compressed latent representation instead. The CodeUpdateArena benchmark represents an important step forward in evaluating how well large language models (LLMs) handle evolving code APIs, a critical limitation of current approaches. The idiom "death by a thousand papercuts" describes a situation in which a person or entity is slowly worn down or defeated by a large number of small, seemingly insignificant problems or annoyances, rather than by one major issue. On average, conversations with Pi last 33 minutes, with one in ten lasting over an hour every day. Self-hosted LLMs offer unparalleled advantages over their hosted counterparts. Existing LLMs use the transformer architecture as their foundational design. Step 3. Find the DeepSeek model you installed. Namely, that it's a numbered list, and each item is a step that is executable as a subtask.
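The memory argument behind MHLA can be illustrated with a simplified low-rank KV cache: instead of storing a full key and value per head for every token, each layer stores one small latent vector per token and re-expands keys and values from it with learned up-projection matrices. The sketch below is a pure-Python toy of that idea, not DeepSeek-V3's implementation. It omits positional-encoding details and the query side of attention, and every dimension in it is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # list of rows


def matvec(vec: Vector, mat: Matrix) -> Vector:
    """Row vector times matrix: (m,) @ (m, n) -> (n,)."""
    return [sum(v * row[j] for v, row in zip(vec, mat)) for j in range(len(mat[0]))]


@dataclass
class LatentKVCache:
    """One layer's cache holding a compressed latent per token, not raw K and V."""

    w_down: Matrix  # d_model x d_latent: compresses the token's hidden state
    w_up_k: Matrix  # d_latent x d_kv: re-expands keys at attention time
    w_up_v: Matrix  # d_latent x d_kv: re-expands values at attention time
    latents: List[Vector] = field(default_factory=list)

    def append(self, hidden: Vector) -> None:
        # Only d_latent numbers are stored per token.
        self.latents.append(matvec(hidden, self.w_down))

    def keys(self) -> List[Vector]:
        return [matvec(c, self.w_up_k) for c in self.latents]

    def values(self) -> List[Vector]:
        return [matvec(c, self.w_up_v) for c in self.latents]


@dataclass
class AttentionShape:
    n_layers: int
    n_heads: int
    d_head: int
    d_latent: int


def standard_kv_elements_per_token(s: AttentionShape) -> int:
    # Full key and value vectors for every head in every layer.
    return s.n_layers * 2 * s.n_heads * s.d_head


def latent_kv_elements_per_token(s: AttentionShape) -> int:
    # One latent vector per layer.
    return s.n_layers * s.d_latent


def cache_bytes(elements_per_token: int, seq_len: int, bytes_per_elem: int = 2) -> int:
    """Total cache size for `seq_len` tokens (2 bytes per element assumes BF16)."""
    return elements_per_token * seq_len * bytes_per_elem
```

The trade-off is extra compute: keys and values must be re-expanded from the latents on each attention step, in exchange for a cache that grows by `d_latent` rather than `2 * n_heads * d_head` numbers per token per layer.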

OpenAI is the example most often used throughout the Open WebUI docs, but Open WebUI can support any number of OpenAI-compatible APIs. There are several aspects of ARC-AGI that could use improvement. This improvement becomes particularly evident in the more challenging subsets of tasks. Looking ahead, we can expect even more integrations with emerging technologies, such as blockchain for enhanced security or augmented reality applications that could redefine how we visualize information. As technology continues to evolve at a rapid pace, so does the potential for tools like DeepSeek R1 to shape the future landscape of information discovery and search technologies. As Inflection AI continues to push the boundaries of what is possible with LLMs, the AI community eagerly anticipates the next wave of innovations and breakthroughs from this trailblazing company. This integration marks a major milestone in Inflection AI's mission to create a personal AI for everyone, combining raw capability with their signature empathetic personality and safety standards. The success of Inflection-1 and the rapid scaling of the company's computing infrastructure, fueled by the substantial funding round, highlight Inflection AI's unwavering commitment to delivering on its mission of creating a personal AI for everyone. Inflection AI's visionary strategy extends beyond model development, as the company recognizes the importance of pre-training and fine-tuning in creating high-quality, safe, and useful AI experiences.
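An "OpenAI-compatible" API here means a server that accepts the same Chat Completions request shape: a POST to `<base_url>/chat/completions` carrying a `model` and a list of `messages`, authenticated with a bearer token. The sketch below builds such a request with the standard library. The base URL, key, and model name are placeholders, and whether a particular server accepts them is an assumption to check against that server's documentation.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_key: str, model: str, user_message: str
) -> urllib.request.Request:
    """Build a Chat Completions request for any OpenAI-compatible server."""
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(
    base_url: str, api_key: str, model: str, user_message: str, timeout: float = 120.0
) -> str:
    """Send one user message and return the first choice's reply text."""
    req = build_chat_request(base_url, api_key, model, user_message)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    return data["choices"][0]["message"]["content"]
```

Because only `base_url` changes between providers, the same code can target a hosted service or a local server; for instance, Ollama serves an OpenAI-compatible endpoint under `http://localhost:11434/v1`, which is the kind of connection Open WebUI can be pointed at.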
