9 Facebook Pages to Follow About DeepSeek

OpenAI has been the undisputed leader in the AI race, but DeepSeek has recently stolen some of the spotlight. On Codeforces, OpenAI o1-1217 leads with a percentile score of 96.6%, while DeepSeek-R1 achieves 96.3%. This benchmark evaluates coding and algorithmic reasoning capabilities. Beyond self-rewarding, we are also dedicated to uncovering other general and scalable rewarding methods to consistently advance the model's capabilities in general scenarios. Hermes-2-Theta-Llama-3-8B is a cutting-edge language model created by Nous Research. Mandarin and Arabic. Custom filters: sort results by date, credibility, or format (e.g., video, research papers). Users can select the "DeepThink" feature before submitting a query to get results using DeepSeek-R1's reasoning capabilities. We might just be recomputing results we've already obtained previously and discarded. According to reports, DeepSeek's cost to train its latest R1 model was just $5.58 million. OpenAI's CEO, Sam Altman, has also stated that training GPT-4 cost over $100 million. Released under the MIT License, DeepSeek-R1 provides responses comparable to other contemporary large language models, such as OpenAI's GPT-4o and o1.

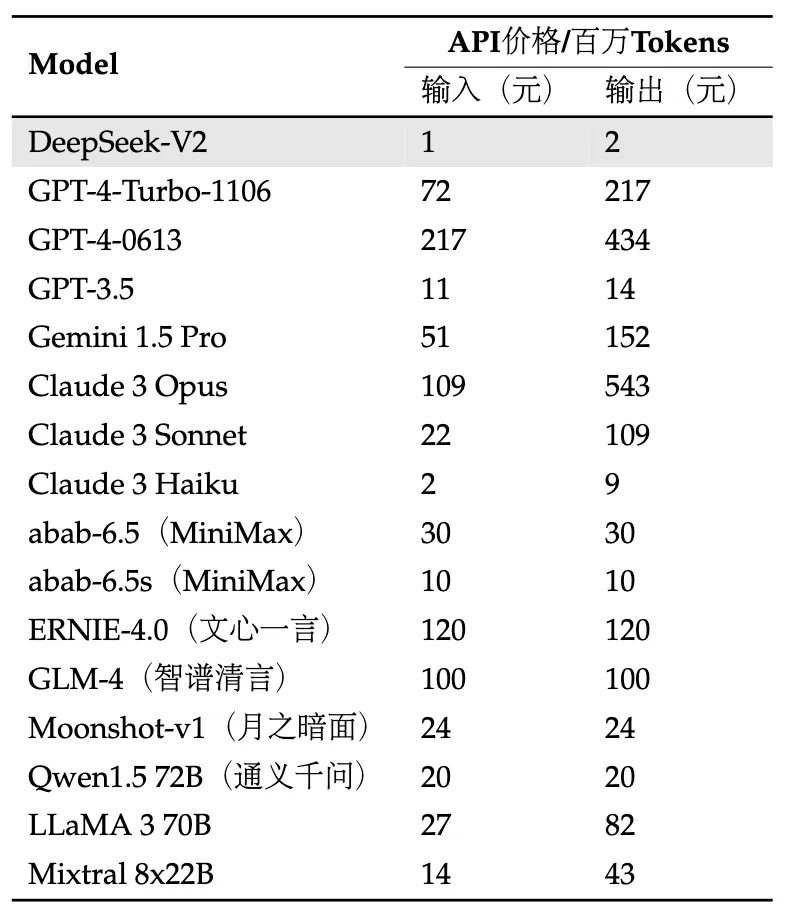

DeepSeek's pricing is significantly lower across the board, with input and output costs a fraction of what OpenAI charges for GPT-4o. However, DeepSeek's development then accelerated dramatically. As I stated above, DeepSeek had a moderate-to-large number of chips, so it is not surprising that they were able to develop and then train a powerful model. We then train a reward model (RM) on this dataset to predict which model output our labelers would prefer. Below, we highlight performance benchmarks for each model and show how they stack up against one another in key categories: mathematics, coding, and general knowledge. In fact, it beats out OpenAI in both key benchmarks. In fact, this model is a strong argument that synthetic training data can be used to great effect in building AI models. DeepSeek-Coder-V2 expanded the capabilities of the original coding model. Both models show strong coding capabilities.

DeepSeek-R1 is the company's latest model, focusing on advanced reasoning capabilities. While OpenAI's o1 maintains a slight edge in coding and factual reasoning tasks, DeepSeek-R1's open-source access and low costs are appealing to users. For MATH-500, DeepSeek-R1 leads with 97.3%, compared to OpenAI o1-1217's 96.4%. This test covers diverse high-school-level mathematical problems requiring detailed reasoning. DeepSeek-R1 is a worthy OpenAI competitor, especially in reasoning-focused AI. For MMLU, OpenAI o1-1217 slightly outperforms DeepSeek-R1 with 91.8% versus 90.8%. This benchmark evaluates multitask language understanding. The model incorporated an advanced mixture-of-experts architecture and FP8 mixed-precision training, setting new benchmarks in language understanding and cost-effective performance. I see this as one of those innovations that look obvious in retrospect but that require a good understanding of what attention heads are actually doing to come up with. The reason low-rank compression is so effective is that there is a lot of information overlap between what different attention heads need to know about. Low-rank compression, on the other hand, allows the same information to be used in very different ways by different heads. In this architectural setting, we assign multiple query heads to each pair of key and value heads, effectively grouping the query heads together, hence the name of the method: grouped-query attention.
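The grouping described at the end of the paragraph above can be sketched in a few lines. This is a minimal illustration, not code from any DeepSeek or OpenAI release: the function names `kv_head_for_query_head` and `group_query_heads` and the head counts (8 query heads sharing 2 key/value heads) are hypothetical, and the sketch assumes consecutive query heads share a key/value head.

```python
def kv_head_for_query_head(q_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """Return the index of the shared key/value head that a query head reads from."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    # Assumption: consecutive query heads form one group.
    group_size = n_query_heads // n_kv_heads
    return q_head // group_size


def group_query_heads(n_query_heads: int, n_kv_heads: int) -> dict[int, list[int]]:
    """Map each key/value head to the list of query heads assigned to it."""
    groups: dict[int, list[int]] = {kv: [] for kv in range(n_kv_heads)}
    for q in range(n_query_heads):
        groups[kv_head_for_query_head(q, n_query_heads, n_kv_heads)].append(q)
    return groups


# Hypothetical configuration: 8 query heads share 2 key/value heads.
groups = group_query_heads(n_query_heads=8, n_kv_heads=2)
print(groups)  # {0: [0, 1, 2, 3], 1: [4, 5, 6, 7]}
```

Because only the key/value heads are cached, this toy configuration would store 2 heads' worth of keys and values per token instead of 8.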

They accomplish this by turning the computation of key and value vectors from the residual stream into a two-step process. Because the only way past tokens have an influence on future tokens is through their key and value vectors in the attention mechanism, it suffices to cache these vectors. Instead of this, DeepSeek has found a way to reduce the KV cache size without compromising on quality, at least in their internal experiments. What is the KV cache and why does it matter? A rough calculation shows why it's important to find methods to reduce the size of the KV cache when we're working with context lengths of 100K or above. We can then shrink the size of the KV cache by making the latent dimension smaller.

You can build the workflow manually, as shown in this tutorial, or use community templates from the n8n template library. Then, you'll see all AI models from the Hugging Face library.
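To make the KV cache discussion above concrete, here is a rough sizing sketch followed by a toy version of the two-step key computation. Every number in it is an assumption chosen for illustration (32 layers, 32 key/value heads of dimension 128, a latent dimension of 512, 2 bytes per value, tiny hand-written weight matrices); none of it is DeepSeek's actual configuration, and the helper names are hypothetical.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Size of a standard KV cache: one key and one value vector per head, layer, and token."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def latent_cache_bytes(n_layers, latent_dim, seq_len, bytes_per_value=2):
    """Size of the cache when only one compressed latent vector is stored per layer and token."""
    return n_layers * latent_dim * seq_len * bytes_per_value


# Hypothetical model at a 100K-token context, 16-bit values.
full = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=100_000)
latent = latent_cache_bytes(n_layers=32, latent_dim=512, seq_len=100_000)
print(f"full KV cache:   {full / 1e9:.1f} GB")    # 52.4 GB
print(f"latent cache:    {latent / 1e9:.1f} GB")  # 3.3 GB
print(f"reduction factor: {full / latent:.0f}x")  # 16x


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector, in plain Python."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


# Toy two-step computation: residual stream (dim 4) -> latent (dim 2) -> key (dim 3).
W_down = [[1, 0, 0, 0], [0, 1, 0, 0]]  # step 1: compress; this output is what gets cached
W_up_k = [[1, 0], [0, 1], [1, 1]]      # step 2: expand the cached latent into a key
h = [0.5, -1.0, 2.0, 3.0]              # residual-stream vector for one token
latent_vec = matvec(W_down, h)
key = matvec(W_up_k, latent_vec)
print(latent_vec, key)
```

In this sketch the cache shrinks in direct proportion to the latent dimension, which is the lever the paragraph above refers to; halving `latent_dim` would halve the latent cache.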